Dear Technocrats,

Through this post, I am going to explore the needs of, and solutions for, Linux administration. In this world of Big Data, the need for storage and processing is growing day by day. To achieve better storage, processing, recoverability and availability of files, you must understand the architecture of your system and network. Especially for new learners of Big Data administration, a good knowledge of Linux administration commands is a must. For the same reason, I will extend this post from time to time to build a better understanding of Linux architecture. First, I am starting with some basic commands:

Linux is an open source, multiuser operating system modeled on the Unix architecture. Linux comes in many flavors (distributions) such as Ubuntu, Mint and CentOS, and each of them releases new versions regularly. To work on Linux, you should be handy with the commands described below:

[The commands run in a terminal. On Ubuntu you can open one with Ctrl+Alt+T.]

After opening the terminal, type the commands as shown below and look at the results.
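As a sketch, here are a few everyday commands (our own selection, which may differ from the ones in the screenshot). They run inside a temporary directory created with mktemp, so nothing else on your system is changed; the names notes, a.txt, b.txt and c.txt are made up for the demo.

```shell
#!/bin/sh
# Work in a throwaway directory so the demo touches nothing else.
work=$(mktemp -d)
cd "$work" || exit 1
pwd                          # print the current working directory
mkdir notes                  # create a directory
echo "hello" > notes/a.txt   # create a file containing one line
ls -l notes                  # list the directory in long format
cat notes/a.txt              # print the file: hello
cp notes/a.txt notes/b.txt   # copy a file
mv notes/b.txt notes/c.txt   # rename (move) a file
rm notes/c.txt               # delete a file
ls notes                     # only a.txt is left
whoami                       # the user you are logged in as
date                         # current date and time
```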

There are also some network-related commands, such as ifconfig, arp, route, finger and traceroute. Try running each of them in your terminal and compare the results with the image below:
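A small sketch, assuming a POSIX shell, that checks which of these tools are present before you try them. On many newer distributions ifconfig, arp and route come from the older net-tools package and may need to be installed separately, while ip (from iproute2) covers the same ground. The function name check_net_tools is hypothetical, and example.com in the comments is only a placeholder host.

```shell
#!/bin/sh
# Report which of the network tools mentioned above are available here.
check_net_tools() {
  for cmd in ifconfig arp route finger traceroute ip; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd: installed"
    else
      echo "$cmd: not found"
    fi
  done
}

check_net_tools

# Typical invocations once the tools are installed:
#   ifconfig -a              # all interfaces and their addresses
#   ip addr show             # the same information via iproute2
#   arp -n                   # ARP cache with numeric addresses
#   route -n                 # kernel routing table, numeric
#   traceroute example.com   # hops to a host (placeholder name)
#   finger "$USER"           # information about a user account
```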

Updating the PATH variable:

In Ubuntu, a PATH setting can be either permanent or temporary. To make a permanent entry, edit the existing PATH line in the /etc/environment file: inside the double quotes of the PATH value, put a colon (:) after the existing entries and then add your new directory. The change applies from your next login. To add a path temporarily, for the current terminal session only, set it directly in the terminal. In our example the earlier path had been removed, so we update the PATH variable as shown:

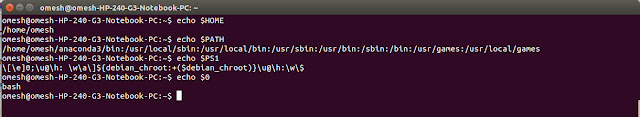
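For the temporary case, a minimal sketch: the helper add_to_path (a hypothetical name) appends a directory to PATH for the current shell only and skips it if it is already there. /opt/mytools/bin is an example directory, not one the post refers to.

```shell
#!/bin/sh
# Append a directory to PATH for this shell session only,
# skipping it if it is already listed.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present, nothing to do
    *) PATH="$PATH:$1" ;;     # append after a ':' separator
  esac
  export PATH
}

add_to_path /opt/mytools/bin  # hypothetical directory
echo "$PATH"
```

To make the same change permanent, the directory would instead go at the end of the quoted value in /etc/environment, for example PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/mytools/bin" (the existing entries on your system may differ). Also note that running this as ./script.sh changes PATH only inside that script; to affect your current terminal, source it with `. ./script.sh` or type the commands directly.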

Home directory:

Two locations are easy to confuse in Linux: the root directory of the whole file system ($ cd /) and the home directory of the user you are logged in as ($ cd ~). Regular users' home directories normally sit inside the /home subdirectory of the root directory (e.g. /home/username), while the root user's own home directory is /root. To find the location of your home directory on your system, follow the command given in the screenshot below:
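A quick sketch of the same check. The path printed for ~ depends on who you are logged in as (typically /home/username, or /root for the root user).

```shell
#!/bin/sh
cd /            # root directory of the whole file system
pwd             # prints /
cd ~            # home directory of the current user
pwd             # e.g. /home/username, or /root for the root user
echo "$HOME"    # the same path, taken from the HOME variable
ls /home        # usually one subdirectory per regular user
```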

Adding and Removing user accounts:

Linux is a multiuser operating system, so in the next steps you will learn how to add and remove user accounts from a Linux terminal:
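A sketch, assuming the standard useradd, passwd and userdel tools (present on both Ubuntu and CentOS). These commands need root, so they go through sudo. The run wrapper and the DRY_RUN switch are our own additions that let you preview the commands before executing them, and add_user/remove_user are hypothetical helper names.

```shell
#!/bin/sh
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

add_user() {
  run sudo useradd -m -s /bin/bash "$1"  # -m creates /home/$1, -s sets the login shell
  run sudo passwd "$1"                   # set an initial password interactively
}

remove_user() {
  run sudo userdel -r "$1"               # -r also removes the home directory and mail spool
}
```

For example, with a hypothetical user alice, `DRY_RUN=1 add_user alice` only prints the two commands, while `add_user alice` runs them. On Ubuntu, `sudo adduser alice` is an interactive alternative that asks for the password and user details in one go.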

File Permissions:

Linux has three sets of rwx (read, write, execute) file permissions: one each for the owner, the group and others.

There are ten identifiers in total. The first shows whether the entry is a directory (d) or a regular file (-); other letters, such as l for a symbolic link, also occur. The remaining nine form three groups of rwx permissions, for the owner, group and others respectively. Have a look at the screenshot of file permissions:
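A sketch in a temporary directory showing how the nine permission characters map to chmod's octal digits (r=4, w=2, x=1, summed per group) and to its symbolic form. The names report.txt and data are made up for the demo.

```shell
#!/bin/sh
# Work in a throwaway directory so nothing real is touched.
dir=$(mktemp -d)
touch "$dir/report.txt"
mkdir "$dir/data"

chmod 754 "$dir/report.txt"       # owner rwx (7), group r-x (5), others r-- (4)
chmod u=rwx,g=rx,o= "$dir/data"   # symbolic form of 750: rwxr-x---

ls -ld "$dir/report.txt" "$dir/data"
# The first column begins with -rwxr-xr-- for the file
# and drwxr-x--- for the directory.
# rm -r "$dir" when you are done
```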

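Note: the permission bits a file gets when it is first created are controlled by the umask. New files start from a base mode of 666 and new directories from 777, and the bits set in the umask are then removed. For example, with a common umask of 022, new files get 644 (rw-r--r--) and new directories get 755 (rwxr-xr-x).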
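A sketch of the umask calculation, assuming the usual base modes of 666 for files and 777 for directories. The umask bits are masked off (a bitwise AND NOT rather than a decimal subtraction), so 022 turns the base modes into 644 and 755. The umask set here lasts only for the shell that runs it.

```shell
#!/bin/sh
umask                  # show the current mask, e.g. 0022 or 0002
umask 022              # for this shell only: clear w for group and others
dir=$(mktemp -d)
touch "$dir/newfile"   # 666 with 022 masked off -> 644 (rw-r--r--)
mkdir "$dir/newdir"    # 777 with 022 masked off -> 755 (rwxr-xr-x)
ls -ld "$dir/newfile" "$dir/newdir"
# rm -r "$dir" when you are done
```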

Editors: (vi, vim, etc...)

Working with editors in Linux also requires knowing their modes. vi and vim are the classic Linux editors, and they work in two main modes: Command mode and Insert mode. When you open or create a file with (vim file_name), you start in Command mode. To write in the file, press "i" (insert before the cursor) or "a" (append after the cursor) to enter Insert mode. Now you can type whatever you want. After you finish writing, press "Esc" to leave Insert mode and return to Command mode. Then type ":q" to quit, ":wq" to save (write) and quit, or ":q!" to quit without saving, and press "Enter". You will be back at your terminal. Happy Editing...

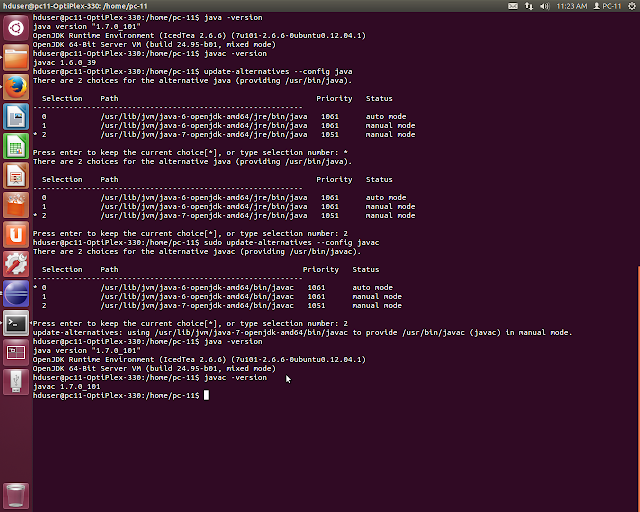

Setting up the java and javac versions:

If more than one Java version is installed on your system, the java and javac commands may end up pointing to different versions. To keep them in sync, follow the commands shown in the figure below:
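A sketch for Debian/Ubuntu, where the update-alternatives tool manages which installed JDK the java and javac commands point to (on CentOS/RHEL the equivalent tool is alternatives). Those commands are interactive and need root, so they appear only as comments. The helpers parse_java_version, parse_javac_version and check_java_sync are hypothetical names; they compare the versions the two commands report so you can confirm the switch worked.

```shell
#!/bin/sh
# Choose the default java and javac among the installed JDKs
# (each shows a numbered menu; run them yourself):
#   sudo update-alternatives --config java
#   sudo update-alternatives --config javac

# Reads e.g.: openjdk version "17.0.2" 2022-01-18  ->  17.0.2
parse_java_version() {
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Reads e.g.: javac 17.0.2  ->  17.0.2
parse_javac_version() {
  sed -n 's/^javac \([^ ]*\).*/\1/p' | head -n 1
}

check_java_sync() {
  if ! command -v java >/dev/null 2>&1 || ! command -v javac >/dev/null 2>&1; then
    echo "java or javac is not on the PATH"
    return 1
  fi
  # Both tools may print their version on stderr, so merge it into stdout.
  jv=$(java -version 2>&1 | parse_java_version)
  cv=$(javac -version 2>&1 | parse_javac_version)
  if [ "$jv" = "$cv" ]; then
    echo "in sync: $jv"
  else
    echo "mismatch: java $jv, javac $cv"
    return 1
  fi
}
```

Run check_java_sync after switching; it prints the shared version, or both versions if they still differ.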

grep utility: grep is one of the most widely used search utilities in the Unix environment. It lets the user perform many kinds of customized searches, as shown:
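A few common grep options, sketched against a small made-up log file (its path comes from mktemp and its lines are invented for the demo). The expected matches are noted in the comments.

```shell
#!/bin/sh
# Sample file to search; contents are made up for the demo.
f=$(mktemp)
printf '%s\n' 'ERROR disk full' 'info: backup done' \
  'error: retry failed' 'warning: low memory' > "$f"

grep 'ERROR' "$f"           # case-sensitive: ERROR disk full
grep -i 'error' "$f"        # -i ignores case: both error lines
grep -n 'backup' "$f"       # -n adds the line number: 2:info: backup done
grep -c -i 'error' "$f"     # -c counts matching lines: 2
grep -v -i 'error' "$f"     # -v inverts: the info and warning lines
grep -E 'disk|memory' "$f"  # -E extended regex with alternation
grep -w 'retry' "$f"        # -w matches whole words only
# grep -r 'pattern' /some/dir would search a directory tree recursively
# rm -f "$f" when you are done
```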

For more specific commands related to Linux administration and networking, this blog will be updated regularly. For shell scripting, visit this post.

For frequent updates on Big Data Analytics, keep visiting this blog or like this page.